Introduction: That Eerie Echo - Why Do Some AI Voices Give Us the Chills?

You press play on a podcast, and the narrator's voice is smooth, clear, and articulate. You ask your smart speaker for the weather, and it responds with cheerful efficiency. You encounter a character in a video game whose dialogue is delivered with near-perfect inflection. In each case, the voice is almost human. Almost. But then, a subtle wrongness creeps in. Perhaps it's a cadence that's just a fraction too even, a pause that doesn't feel quite natural, or an attempt at emotion that doesn't fully land. Suddenly, the feeling of immersion shatters, replaced by a sense of unease, a strange discomfort you can't quite name. Instead of feeling engaged, you feel a little creeped out.

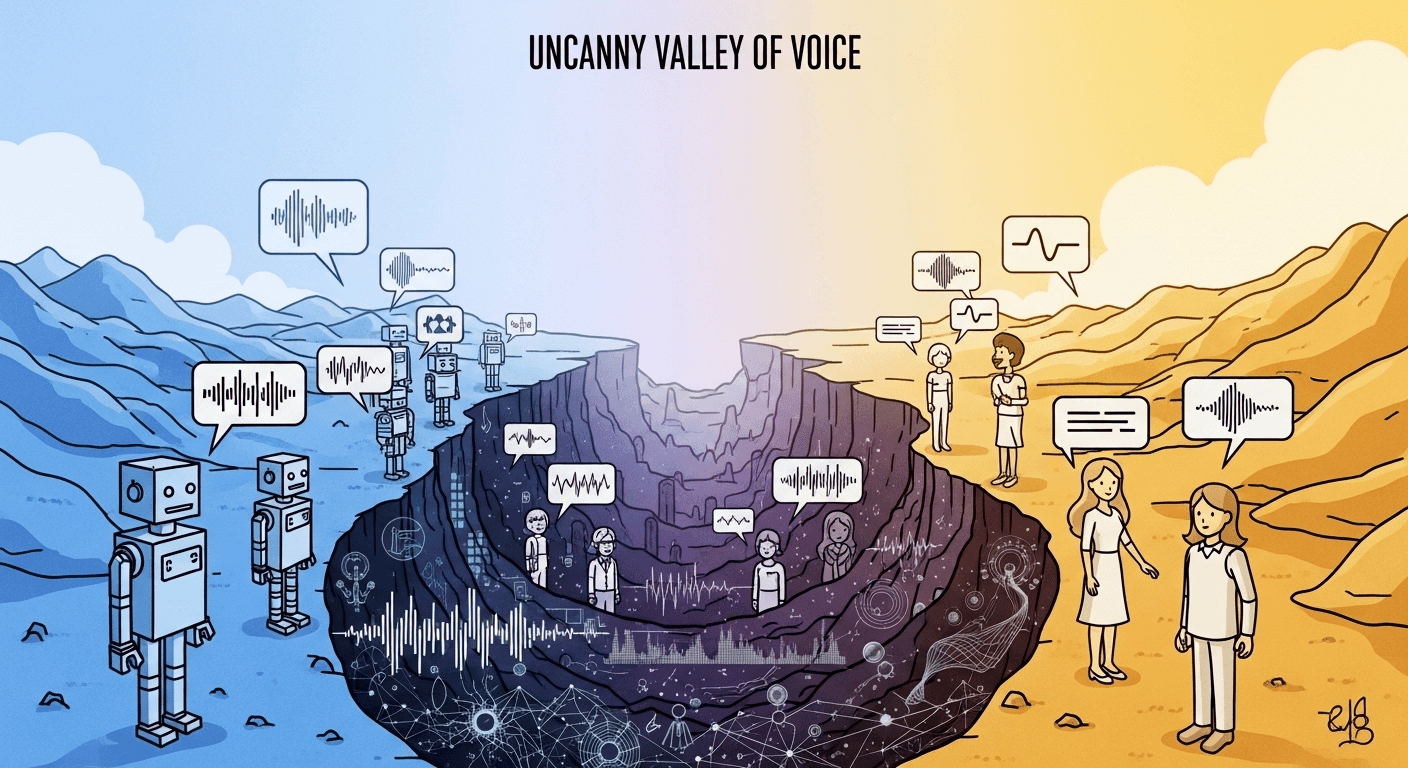

This experience, familiar to anyone who interacts with modern digital media, has a name: the Uncanny Valley of Voice. It is a specific manifestation of a broader psychological phenomenon where artificial entities that are almost human are perceived as more unsettling than those that are either clearly robotic or indistinguishable from a real person.¹ This is not merely a niche technical glitch; it represents a fundamental barrier to creating truly natural, engaging, and trustworthy interactions between humans and machines. For content creators, podcasters, and developers, it is the invisible wall that can make an audience lean in or pull away in revulsion.²

The challenge is more profound than ever. In the past, we mistrusted artificial creations because they looked or sounded "off." Today, as technology advances at a breathtaking pace, we are entering what some call a "second uncanny valley"—one where we mistrust things precisely because they seem too real.³ This new realism creates a fresh kind of unease, blurring the lines between authentic and artificial and forcing us to question what we see and hear. For creators, the stakes are immense. An AI voice that falls into the valley can break a listener's trust, ruin a narrative, and undermine the credibility of the content itself. Overcoming this hurdle is essential for the future of digital communication, entertainment, and human-computer collaboration.²

This report explores this critical topic in depth. We begin with the origins of the uncanny valley theory and the deep-seated psychological reasons for our discomfort. We then trace the long and complex history of text-to-speech technology, revealing how its very progress led us into this auditory chasm. Dissecting the specific vocal characteristics that trigger the "creepy" feeling shows what developers are up against. Finally, we explore the cutting-edge techniques being used to climb out of the valley and introduce a new generation of tools, like VocalCopycat, that are engineered to deliver strikingly natural voices, finally allowing creators to connect with their audiences without the eerie echo of the uncanny.

Deconstructing the Uncanny: From Lifeless Robots to Soulless Voices

To understand why a nearly human voice can be so unsettling, we must first travel back to 1970s Japan, to the mind of a robotics professor who gave a name to this strange sensation. His insights into our relationship with machines laid the groundwork for understanding our modern reactions to everything from CGI characters to AI voice assistants.

The Birth of a Theory: Masahiro Mori's Vision

In 1970, Japanese robotics professor Masahiro Mori published a short but profoundly influential essay titled "Bukimi no Tani," which was later translated as "The Uncanny Valley".⁶ In it, Mori proposed a hypothesis about human emotional responses to robots and other non-human entities. He illustrated his idea with a simple graph that has since become iconic. The graph plots our emotional response, or "affinity," against an entity's degree of human likeness.⁷

Mori's hypothesis states that as a robot's appearance is made more human, our affinity for it grows steadily more positive. An industrial robot arm is neutral. A toy robot like WALL-E is cute and endearing. A humanoid robot is fascinating. This positive correlation continues until we approach a point of near-perfect human realism. At this precipice, our affinity suddenly and dramatically plummets into strong revulsion. This sharp dip is the "uncanny valley".³ Only after crossing this valley, with an entity that is virtually indistinguishable from a healthy human, does our affinity return to a high, positive peak.

Mori noted that movement amplifies the effect dramatically. A still, human-like doll might be slightly eerie, but one that moves with unnatural stiffness or slowness plunges deep into the valley.⁷ To illustrate his point, Mori used the example of a modern prosthetic hand. From a distance, it looks remarkably real. Our affinity is high. But upon shaking it, we discover it is cold, hard, and lacks the subtle give of human flesh. This mismatch between visual expectation and tactile reality creates a macabre, uncanny feeling.¹²

The Psychology Behind the "Creeps": Why We Feel This Way

Mori's observation was insightful, but why does this valley exist? Psychologists and cognitive scientists have proposed several overlapping theories, each shedding light on a different facet of our deep-seated discomfort. These theories suggest that the uncanny feeling is not an arbitrary quirk but a product of powerful evolutionary and cognitive mechanisms. A central theme uniting them is the concept of a perceptual mismatch, where our brain detects a conflict between different cues, leading to a "prediction error" that generates unease.⁷

Key Psychological Theories

Evolutionary Threat and Pathogen Avoidance: One of the most powerful theories suggests that the uncanny valley is an evolved survival mechanism. Our ancestors' survival depended on quickly identifying potential threats, including sick or deceased individuals who could carry disease.² An entity that looks almost human but displays subtle abnormalities, such as lifeless eyes, pale skin, or jerky movements, can trigger the same instinctual revulsion we feel towards a corpse or a person with a contagious illness. It is a biological alarm bell, warning us to stay away from something that is "not right" and could be dangerous.⁸ Tellingly, Mori himself placed a "corpse" at the very bottom of his uncanny valley graph, representing the peak of this negative feeling.¹¹

Cognitive Dissonance and Categorical Uncertainty: The human brain is a categorization machine. We constantly sort the world into neat boxes: human/non-human, animate/inanimate, friend/foe. An entity that resides in the uncanny valley defies this process. It is neither clearly a machine nor clearly a person, creating a profound sense of ambiguity.³ Our brain struggles to classify it, leading to cognitive dissonance, a state of mental discomfort caused by holding conflicting ideas simultaneously.¹¹ This uncertainty about whether something is alive or dead, real or artificial, is inherently unsettling.¹³

Violation of Human Norms and Empathy Breakdown: When we see something that looks human, we subconsciously activate our internal model of a human being, complete with a rich set of expectations about how it should behave, move, and express emotion.² An uncanny entity violates these norms. It might have a photorealistic face but "dead" eyes, or it might speak with perfect grammar but zero emotional warmth. Because it looks so human, we don't judge it as a well-performing robot; we judge it as an abnormal human doing a poor job of seeming normal.⁷ This violation of our expectations can feel soulless or zombie-like, causing our capacity for empathy to break down and be replaced by feelings of strangeness and distrust.²

Theoretical Framework Summary

| Theory Name | Core Principle | Application to Voice |
|---|---|---|
| Pathogen Avoidance | Evolved instinct to avoid sources of disease or death, triggered by subtle physical abnormalities.² | A voice that sounds lifeless, has an unnatural "gurgle," or lacks the warmth and vitality of a healthy human can trigger instinctual revulsion. |
| Cognitive Dissonance | Mental discomfort arising from the inability to categorize an entity as either human or non-human, animate or inanimate.³ | A voice that is neither clearly robotic nor perfectly natural creates unsettling ambiguity. The brain struggles to process it, leading to unease. |
| Violation of Norms | Negative reaction when an almost-human entity fails to meet our subconscious social and behavioral expectations for a human.² | A voice with perfect syntax but flat, inappropriate, or missing emotion is perceived not as a good AI but as a "disturbed" human, causing discomfort. |

Translating the Visual to the Auditory

These psychological principles, though born from observing robots and CGI, apply with equal force to the world of sound. The uncanny valley is not limited to what we see; it is triggered by what we hear as well. A synthetic voice that is almost perfectly human but contains subtle flaws falls into the same trap. We don't hear it as a "good computer voice"; we hear it as a "strange and unsettling person".¹

The critical factor, once again, is the mismatch. Research has repeatedly shown that incongruity between different sensory cues is a powerful trigger for the uncanny feeling. For example, one study found that a robot with a human voice was perceived as significantly eerier than either a robot with a matching synthetic voice or a human with a human voice.⁷ The conflict between the mechanical appearance and the organic sound creates a dissonance that our brains find disturbing. This principle of mismatched cues is the key to understanding the specific auditory flaws that can plunge an AI voice deep into the uncanny valley.

The Long Road to a Digital Voice: A Brief History of Speech Synthesis

The modern problem of the uncanny valley of voice did not appear overnight. It is the direct result of a centuries-long technological quest to create artificial speech. This journey saw inventors and engineers slowly climb the hill of human-likeness on Mori's graph, moving from clumsy mechanical contraptions to robotic digital tones, and finally to neural networks so realistic they teeter on the edge of the uncanny precipice. Understanding this history is essential to appreciating why the uncanny valley is such a contemporary challenge—we had to get good enough to be creepy.

The Mechanical Age (1700s-1930s)

The dream of a talking machine is surprisingly old. One of the earliest notable attempts was in 1791, when Hungarian inventor Wolfgang von Kempelen unveiled his "Acoustic-Mechanical Speech Machine." This elaborate device used bellows to simulate lungs, reeds for vocal cords, and even models of a tongue and lips to produce consonants and vowels.¹⁴ These early efforts were mechanical marvels, but their sound was far from human. They were novelties, too artificial to be considered uncanny.

The first major step into the electronic age came in 1939, when Bell Labs engineer Homer Dudley demonstrated the VODER (Voice Operation Demonstrator) at the New York World's Fair. This complex machine was the first fully electronic speech synthesizer. It used electronic resonators to create speech-like sounds, but it was incredibly difficult to operate, requiring a trained person to "play" it using a keyboard and foot pedals, much like a musical instrument.¹⁶ The resulting speech was intelligible but unmistakably artificial.

The Digital Revolution (1950s-2000s): From Rules to Recordings

With the advent of computers, the quest for synthetic speech moved into the digital realm. In 1961, an IBM 704 computer at Bell Labs was programmed to "sing" the song "Daisy Bell," an event that became iconic in the history of technology.¹⁵ This era was dominated by rule-based systems, most notably formant synthesis. This technique used algorithms to generate the basic acoustic frequencies (formants) of human speech. The result was the classic, monotonous "robot voice" that many people still associate with early text-to-speech (TTS), famously used by physicist Stephen Hawking.¹⁷ These voices were clearly machines, sitting low on the human-likeness scale and thus avoiding the uncanny valley.
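To make the source-filter idea behind formant synthesis concrete, here is a minimal Python sketch using only the standard library. It assumes a constant-pitch impulse train as the voice source and a cascade of two-pole digital resonators, one per formant; the formant frequencies and bandwidths are illustrative values for an /a/-like vowel, not parameters from any historical system. The unchanging pitch is exactly what produces the monotone "robot voice."

```python
import math

SAMPLE_RATE = 16_000  # samples per second (assumed)


def impulse_train(f0_hz: float, n_samples: int, sr: int = SAMPLE_RATE) -> list[float]:
    """Stand-in for the glottal source: one unit impulse per pitch period, at a fixed pitch."""
    period = round(sr / f0_hz)
    return [1.0 if i % period == 0 else 0.0 for i in range(n_samples)]


def resonator(signal: list[float], freq_hz: float, bw_hz: float, sr: int = SAMPLE_RATE) -> list[float]:
    """Two-pole digital resonator centred on freq_hz, with bandwidth bw_hz."""
    r = math.exp(-math.pi * bw_hz / sr)        # pole radius set by the bandwidth
    theta = 2 * math.pi * freq_hz / sr         # pole angle set by the centre frequency
    b1, b2 = 2 * r * math.cos(theta), -r * r   # feedback coefficients
    gain = 1 - b1 - b2                         # unity gain at 0 Hz
    out, y1, y2 = [], 0.0, 0.0
    for x in signal:
        y = gain * x + b1 * y1 + b2 * y2
        out.append(y)
        y1, y2 = y, y1
    return out


def formant_vowel(formants: list[tuple[float, float]], f0_hz: float = 120.0,
                  duration_s: float = 0.5, sr: int = SAMPLE_RATE) -> list[float]:
    """Pass the source through one resonator per (frequency, bandwidth) pair, in cascade."""
    signal = impulse_train(f0_hz, int(duration_s * sr), sr)
    for freq, bw in formants:
        signal = resonator(signal, freq, bw, sr)
    peak = max(abs(s) for s in signal) or 1.0
    return [s / peak for s in signal]          # normalise to the range [-1, 1]


# Illustrative formant values for an /a/-like vowel (assumed, not measured).
vowel_a = formant_vowel([(700.0, 130.0), (1220.0, 70.0), (2600.0, 160.0)])
```

Writing `vowel_a` to a 16-bit WAV file (for example with Python's `wave` module) would give a buzzy, perfectly steady vowel. Making it less robotic means varying `f0_hz` and the formant values over time, which is what the rule sets inside real formant synthesizers were for.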

A significant leap in naturalness came in the 1990s with concatenative synthesis.¹⁴ Instead of generating sounds from scratch using rules, this method used a large database of pre-recorded human speech. The audio was sliced into small units (like diphones, the transitions between two sounds), which were then stitched together, or "concatenated," to form new words and sentences.¹⁶ This approach produced voices that were far more natural than their formant-based predecessors. However, the seams were often audible. The rhythm and intonation (prosody) could be choppy and uneven, as the stitched-together pieces didn't always flow smoothly. These voices were climbing Mori's curve—more human-like, but with noticeable flaws that kept them from being truly believable.
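The audible "seams" can be illustrated with a short sketch. It assumes each recorded unit is already a list of samples at a common sample rate and compares butt-splicing with a simple linear crossfade. Real concatenative systems went further (for example, pitch-synchronous overlap-add and unit-selection cost functions), so treat this only as an illustration of why joins are audible.

```python
def concatenate_units(units: list[list[float]], overlap: int = 0) -> list[float]:
    """Join recorded speech units end to end.

    overlap == 0 butt-splices the units, which can leave an audible click at
    each seam; overlap > 0 linearly crossfades that many samples at each join.
    """
    if not units:
        return []
    out = list(units[0])
    for unit in units[1:]:
        n = min(overlap, len(out), len(unit))
        start = len(out) - n
        for i in range(n):
            w = (i + 1) / (n + 1)              # weight of the incoming unit rises toward 1
            out[start + i] = (1 - w) * out[start + i] + w * unit[i]
        out.extend(unit[n:])
    return out


def max_jump(samples: list[float]) -> float:
    """Largest sample-to-sample step: a crude proxy for how audible a splice is."""
    return max(abs(b - a) for a, b in zip(samples, samples[1:]))


# Two toy "units" at different levels, standing in for mismatched recordings.
unit_a, unit_b = [1.0] * 4, [0.0] * 4
hard = concatenate_units([unit_a, unit_b])             # max_jump(hard) == 1.0
soft = concatenate_units([unit_a, unit_b], overlap=2)  # max_jump(soft) is about 0.33
```

The crossfade spreads the discontinuity over the overlap, but it cannot repair mismatched pitch or timing between units, which is where the choppy prosody of concatenated voices came from.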

The AI Era (2010s-Present): The Dawn of Neural Voices

The last decade has witnessed a revolution in speech synthesis, driven by the rise of artificial intelligence, deep learning, and neural networks.¹⁷ The bridge into this era was Statistical Parametric Synthesis (SPS), developed largely during the 2000s, which used statistical models (like Hidden Markov Models) to generate speech parameters instead of splicing recordings. This offered more flexibility and smoother output than concatenation but could still sound muffled or buzzy.¹⁹

The true turning point came in 2016 with the development of WaveNet by Google's DeepMind.¹⁷ This deep neural network represented a fundamentally new approach. Instead of modeling parameters or stitching together recordings, WaveNet learned to generate the raw audio waveform of human speech, one sample at a time. The result was a dramatic, unprecedented leap in quality and realism.¹⁸ This was the technology that pushed synthetic voices right up to the edge of the uncanny valley. They were now so close to human that the smallest remaining imperfections became glaringly obvious and deeply unsettling.
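The "one sample at a time" idea can be sketched as an autoregressive loop. The sketch keeps two details from the WaveNet paper, 8-bit mu-law companding of the waveform into 256 classes and a predicted distribution over the next class, but replaces the deep stack of dilated causal convolutions with a hypothetical `toy_model` that simply favours values near the previous sample. It illustrates the generation loop only, not WaveNet's network.

```python
import math
import random
from collections.abc import Callable, Sequence

MU = 255  # 8-bit mu-law: 256 quantisation classes


def mu_law_encode(x: float) -> int:
    """Compress a sample in [-1, 1] and quantise it to a class in [0, MU]."""
    y = math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)
    return round((y + 1) / 2 * MU)


def mu_law_decode(q: int) -> float:
    """Map a class back to an approximate sample value in [-1, 1]."""
    y = 2 * q / MU - 1
    return math.copysign(((1 + MU) ** abs(y) - 1) / MU, y)


def toy_model(history: Sequence[int]) -> list[float]:
    """Hypothetical stand-in for the neural network: unnormalised weights
    over the next class, favouring values close to the previous sample."""
    last = history[-1]
    return [math.exp(-abs(c - last)) for c in range(MU + 1)]


def generate(model: Callable[[Sequence[int]], Sequence[float]],
             seed: list[int], n_samples: int, rng: random.Random) -> list[int]:
    """Autoregressive sampling: each new class is drawn from a distribution
    conditioned on every class generated so far."""
    samples = list(seed)
    for _ in range(n_samples):
        weights = model(samples)
        samples.append(rng.choices(range(MU + 1), weights=weights)[0])
    return samples


classes = generate(toy_model, seed=[mu_law_encode(0.0)], n_samples=1_000, rng=random.Random(0))
waveform = [mu_law_decode(q) for q in classes]
```

Because every new sample needs a full pass through the network, this loop is slow for a real model producing thousands of samples per second of audio, which is one reason later systems moved to faster, parallel vocoders.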

Modern systems have built on this foundation, using end-to-end models like Tacotron that learn the entire process from text to speech features directly from data, further enhancing fluency and expressiveness.¹⁹ The journey was complete: technology had successfully created voices that were good enough to be creepy.

Evolution Timeline

| Era | Key Technology | Resulting Voice Quality | Relation to Uncanny Valley |
|---|---|---|---|
| Mechanical Era (1700s-1930s) | Bellows, Reeds, Electronic Resonators (VODER)¹⁴ | Highly artificial; at best intelligible, never natural. | Too artificial to be uncanny. Clearly a machine. |
| Early Digital (1950s-1980s) | Rule-Based / Formant Synthesis¹⁷ | Intelligible but distinctly "robotic," monotonous, and lacking prosody. | Clearly a machine voice, not uncanny. |
| Concatenative (1990s-2000s) | Diphone Concatenation (stitching recorded speech)¹⁹ | More natural tone but often disjointed with uneven rhythm and audible "seams." | Begins to approach the valley as it gets closer to human-likeness. |
| Neural AI (2010s-Present) | Deep Neural Networks (WaveNet, Tacotron)¹⁷ | Remarkably realistic, approaching human-level fidelity in tone and clarity. | Falls directly into the uncanny valley, where subtle flaws become highly unsettling. |

The Anatomy of "Creepy": What Makes an AI Voice Fall into the Valley?

We now understand that the uncanny valley is a psychological reaction to near-human entities and that the history of TTS technology has led us directly to its edge. But what are the specific, tangible flaws in a synthetic voice that trigger this unsettling feeling? These are the subtle "tells" that our brains, honed by a lifetime of listening to human speech, detect instantly. They are the "artifacts" that developers and platforms like VocalCopycat are working tirelessly to eliminate.²² The creepiness is not random; it is a predictable response to a specific set of auditory failures.

1. Prosodic Mismatches: The Unnatural Rhythm of Speech

Prosody is the music of language—the rhythm, stress, pitch, and intonation that convey meaning and emotion beyond the words themselves.⁴ It is arguably the most complex and most human aspect of speech, and it is where AI voices most often fail.

Key Prosodic Issues

Unnatural Cadence and Pacing: Human speech has a natural, variable flow. We speed up when excited, slow down when thoughtful, and often run sentences together. Many AI voices, in contrast, exhibit a stilted, almost metronomic pace.²³ Every word is given equal weight, and the rhythm is too perfect, too even. This lack of natural cadence is an immediate red flag for our brains.
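One way to put a number on "too even" pacing is the normalised Pairwise Variability Index (nPVI), a rhythm measure from phonetics research that averages the contrast between neighbouring durations. It does not come from the sources cited here; the sketch is an illustrative diagnostic, and the syllable durations are made up.

```python
def npvi(durations: list[float]) -> float:
    """Normalised Pairwise Variability Index of successive durations.

    0 means perfectly even, metronomic timing; higher values mean more
    contrast between neighbouring intervals (e.g. long and short syllables).
    """
    if len(durations) < 2:
        raise ValueError("need at least two durations")
    contrasts = [abs(a - b) / ((a + b) / 2) for a, b in zip(durations, durations[1:])]
    return 100 * sum(contrasts) / (len(durations) - 1)


# Hypothetical syllable durations in seconds (illustrative, not measured).
metronomic = [0.20, 0.20, 0.20, 0.20, 0.20]
varied = [0.12, 0.31, 0.09, 0.25, 0.18]
```

Here `npvi(metronomic)` returns 0.0 while `npvi(varied)` is about 81. A synthetic voice whose syllable durations score near zero is, by this measure, speaking like a metronome.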

Flawed Intonation and Emphasis: Where we place emphasis in a sentence dramatically changes its meaning. AI voices frequently get this wrong, stressing the wrong word or using a flat, declarative tone for a question. A particularly common flaw is "upspeak," where a statement ends with a rising inflection, making the AI sound perpetually uncertain or questioning.⁴
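A crude check for unintended upspeak compares the pitch at the end of an utterance with the pitch of the rest. The sketch assumes you already have a per-frame fundamental-frequency (F0) track from a pitch tracker, with 0.0 marking unvoiced frames; the 20% tail and the 2-semitone threshold are hypothetical values chosen for illustration, not established cutoffs.

```python
import math
from statistics import median


def final_rise_semitones(f0_hz: list[float], tail_fraction: float = 0.2) -> float:
    """Pitch of the utterance's final stretch relative to the rest, in semitones.

    f0_hz holds one pitch estimate (Hz) per analysis frame; 0.0 marks an
    unvoiced frame, and those frames are ignored.
    """
    voiced = [f for f in f0_hz if f > 0]
    if len(voiced) < 5:
        raise ValueError("need at least five voiced frames")
    split = max(1, int(len(voiced) * (1 - tail_fraction)))
    body, tail = voiced[:split], voiced[split:]
    return 12 * math.log2(median(tail) / median(body))


def sounds_like_upspeak(f0_hz: list[float], threshold_st: float = 2.0) -> bool:
    """Flag a statement whose pitch rises at the end by more than threshold_st semitones."""
    return final_rise_semitones(f0_hz) > threshold_st


# Hypothetical F0 tracks (Hz) for the same statement, one value per frame.
falling = [0.0, 200.0, 205.0, 200.0, 198.0, 195.0, 190.0, 180.0, 170.0, 160.0, 150.0]
rising = [0.0, 200.0, 205.0, 200.0, 198.0, 195.0, 200.0, 220.0, 240.0, 260.0, 280.0]
```

A check like this only makes sense for declarative sentences; for questions, a final rise is often exactly what a listener expects.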

Awkward Pausing and Latency: Pauses are not just empty space; they are crucial communicative tools. We pause for breath, to gather our thoughts, or for dramatic effect. AI voices often lack these realistic micro-pauses, creating a breathless, run-on effect.²³ In conversational AI, latency (the delay between a user speaking and the AI responding) is also a major issue. A delay of even a fraction of a second can disrupt the natural turn-taking rhythm of conversation, making the interaction feel awkward and stilted.¹
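For the pausing problem, many TTS engines accept SSML (the W3C Speech Synthesis Markup Language), whose `break` element requests an explicit pause, for example `<break time="300ms"/>`. The sketch inserts breaks after punctuation; the pause lengths in `PAUSE_MS` are hypothetical starting points, the bare `<speak>` wrapper is a minimal form many engines accept (the full W3C form also carries version and namespace attributes), and engines differ in which SSML features they honour. It addresses micro-pauses only, not conversational latency.

```python
import re
from xml.sax.saxutils import escape

# Hypothetical pause lengths in milliseconds; tune by ear for a given voice.
PAUSE_MS = {",": 200, ";": 300, ":": 300, ".": 500, "?": 500, "!": 500}


def add_breaks(text: str) -> str:
    """Return an SSML document with a <break> after each punctuation mark in PAUSE_MS."""
    pieces = re.split(r"([,;:.?!])", text)   # the capture group keeps the punctuation
    out = []
    for piece in pieces:
        out.append(escape(piece))            # escape &, <, > so the SSML stays well-formed
        if piece in PAUSE_MS:
            out.append(f'<break time="{PAUSE_MS[piece]}ms"/>')
    return "<speak>" + "".join(out).strip() + "</speak>"


ssml = add_breaks("Well, I think so. Don't you?")
```

The naive split also fires on decimal points and abbreviations ("3.5", "Dr."), so a production version would need proper sentence segmentation.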

2. The Empathy Gap: Emotionally Flat and Contextually Unaware

Human speech is saturated with emotion. Our voices are the primary instruments for conveying happiness, sadness, anger, and empathy. When an AI voice attempts to communicate without this emotional layer, it creates a profound and disturbing disconnect.

A voice that sounds technically perfect but is emotionally flat feels sterile, controlled, and ultimately untrustworthy.²⁴ This is the "soulless" quality that can make an AI feel like a zombie—a core trigger of the uncanny feeling.³ The problem is magnified when there is a mismatch between the content of the words and the emotional tone of the voice. An AI that announces bad news in a cheerful tone or describes an exciting event with deadpan neutrality fails to demonstrate the emotional intelligence and contextual awareness we expect from a human communicator.¹ This failure to produce an appropriate emotional response is deeply unsettling because it violates our fundamental model of how a sentient being should react.

3. The Trap of Inauthentic Perfection

Counterintuitively, a voice that is too perfect can be just as creepy as one with obvious flaws. This is because real human speech is inherently messy. We pause, we stammer, we use filler words like "um" and "ah," we mispronounce words, and our pitch varies.²⁴ These "imperfections" are not errors; they are vital signals of authenticity. They show that we are thinking, processing, and engaging in a live, unscripted act of communication. They make us sound vulnerable and, therefore, human.

An AI voice that is stripped of these imperfections—one that has flawless enunciation, a perfectly steady pitch, and no hesitations—can feel hollow and inauthentic. This "sterile perfection" can be perceived as controlled, calculated, and even manipulative.²⁴ We subconsciously recognize that no real human speaks this way, which creates a barrier of distrust. The perfection itself becomes the flaw, a clear signal of the voice's artificial origin.

4. The Mismatch Problem: When Voice and Context Clash

As established earlier, a mismatch between different perceptual cues is a primary driver of the uncanny valley. This applies strongly to the relationship between a voice and its source or context.

Types of Mismatches

Voice-Visual Mismatch: A highly realistic, human-sounding voice emanating from a character that is clearly a robot can be deeply eerie. The brain receives conflicting signals: the eyes see a machine, but the ears hear a person. This incongruence is difficult to resolve, leading to discomfort.⁷ The same is true for CGI characters whose facial expressions or lip movements are not perfectly synchronized with their speech.²⁶

Voice-Context Mismatch: The style of a voice must match its situation. A formal, booming announcer voice used for a casual, intimate conversation feels jarring and out of place.⁵ A recent example was the 2024 controversy surrounding "Sky," one of the voices available in OpenAI's ChatGPT. Many listeners felt it was uncannily similar to the voice of actress Scarlett Johansson, particularly her role as a sentient AI in the film Her. This created a powerful mismatch between the expectation of a generic, anonymous assistant and the reality of a specific, famous, and culturally loaded persona, generating significant unease.²⁷

These flaws are not all equal in their power to disturb. A simple mispronunciation can be easily overlooked, but errors in prosody and emotion are far more unsettling. This is because rhythm and emotional tone are fundamental to human communication, operating on a deep, subconscious level. When an AI voice gets these wrong, it doesn't just make a mistake; it violates the very essence of what makes speech feel human, plunging it straight into the uncanny valley.

Climbing Out of the Valley: The Cutting-Edge of Voice AI

For decades, the primary goal of speech synthesis was intelligibility—could the machine be understood? Today, the goal has shifted dramatically. The new frontier is not just intelligibility but believability, authenticity, and emotional resonance.²⁴ Researchers and engineers are no longer just trying to make voices that sound correct; they are trying to create voices that feel real. This requires a new arsenal of sophisticated techniques aimed squarely at solving the problems of prosody, emotion, and context that define the uncanny valley.

1. Advanced Prosody and Emotion Modeling

The most significant advances are happening in the modeling of the "music" of speech. Instead of treating speech as a simple string of sounds (phonemes), state-of-the-art systems are learning to understand and replicate its higher-level structures.

Key Technological Advances

Sophisticated Prosody Modeling: The latest research focuses on explicitly modeling prosody to ensure it aligns with the content and context of the speech. For example, some methods use a prosody-enhanced acoustic pre-training stage, where a model is first trained on a massive dataset to become an expert in generating high-quality acoustic features. In a second stage, this acoustic system is frozen, and a separate framework is used to model and adapt the prosody based on the script, the desired dubbing style, or even visual cues like lip movements and character emotions from a video.²⁸ This acoustic-disentangled approach allows for precise, independent control over the voice's sound and its rhythm, preventing the two from corrupting each other.

Nuanced Emotion Simulation: Early emotional TTS was limited to a few basic, categorical emotions like "happy," "sad," or "angry." This often resulted in caricatured, unnatural performances. The cutting edge of emotion synthesis involves controlling emotion along continuous dimensions. Drawing from psychological theories, models can now be controlled using a vector based on dimensions like Pleasure, Arousal, and Dominance (the PAD model).²⁹ By adjusting these three values, a creator can synthesize a vast and subtle spectrum of emotional states, such as "anxious," "excited," or "relaxed," that are far more nuanced and believable than simple categories.²⁹
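A minimal sketch of continuous emotion control: each emotional state is a point in Pleasure-Arousal-Dominance space, and intermediate states are reached by interpolation. The anchor coordinates are hypothetical placeholders, not published PAD ratings, and how a given TTS model consumes the vector (for instance, by projecting it into a conditioning embedding) is model-specific and not shown.

```python
from dataclasses import dataclass


def _clip(v: float) -> float:
    """Keep a coordinate inside the [-1, 1] range used here for each PAD axis."""
    return max(-1.0, min(1.0, v))


@dataclass(frozen=True)
class PAD:
    """An emotional state as a point in Pleasure-Arousal-Dominance space."""
    pleasure: float
    arousal: float
    dominance: float

    def clipped(self) -> "PAD":
        return PAD(_clip(self.pleasure), _clip(self.arousal), _clip(self.dominance))


def blend(a: PAD, b: PAD, t: float) -> PAD:
    """Linear interpolation: t=0 gives a, t=1 gives b, values between give mixed states."""
    def lerp(x: float, y: float) -> float:
        return (1 - t) * x + t * y
    return PAD(lerp(a.pleasure, b.pleasure),
               lerp(a.arousal, b.arousal),
               lerp(a.dominance, b.dominance)).clipped()


# Hypothetical anchor points for illustration only (not published PAD ratings).
RELAXED = PAD(0.6, -0.5, 0.2)
EXCITED = PAD(0.7, 0.8, 0.3)
ANXIOUS = PAD(-0.5, 0.6, -0.4)

# A state a quarter of the way from "relaxed" toward "anxious".
slightly_uneasy = blend(RELAXED, ANXIOUS, 0.25)
```

The point of the continuous representation is visible in `blend`: any value of `t` yields a valid state, so there is no need for a separate category for every shade of feeling.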

Flexible Style Transfer: To capture the full diversity of human expression, models are being designed to better encode and transfer stylistic information from a reference audio clip. Techniques like StyleMoE (Mixture of Experts) use specialized "expert" sub-networks, each trained on different kinds of speech, to better capture the full range of timbre, emotion, and prosody in the style space. This allows the model to generate more expressive and varied speech from unseen reference voices.³¹
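The general mixture-of-experts idea can be sketched as a gate that scores each expert from the reference features, keeps the top-scoring few, and blends their outputs with softmax weights. This is a generic top-k MoE, not StyleMoE's published architecture; the toy experts and gate weights are hypothetical, and in a real model both would be learned sub-networks.

```python
import math
from collections.abc import Callable

Expert = Callable[[list[float]], list[float]]


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)                            # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mixture_of_experts(features: list[float], experts: list[Expert],
                       gate_weights: list[list[float]], top_k: int = 2) -> list[float]:
    """Route reference-audio features to the top_k experts and blend their outputs.

    gate_weights holds one row per expert; each expert's gate score is the dot
    product of its row with the features. Only the chosen experts are run.
    """
    scores = [sum(w * x for w, x in zip(row, features)) for row in gate_weights]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    mix = softmax([scores[i] for i in chosen])
    outputs = [experts[i](features) for i in chosen]
    return [sum(w * out[d] for w, out in zip(mix, outputs)) for d in range(len(outputs[0]))]


# Hypothetical toy experts mapping a 2-d feature to a 2-d style embedding.
experts: list[Expert] = [
    lambda f: [f[0], 0.0],
    lambda f: [0.0, f[1]],
    lambda f: [f[0] + f[1], f[0] - f[1]],
]
gate = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
style = mixture_of_experts([2.0, 0.0], experts, gate, top_k=2)
```

Running only the `top_k` experts keeps computation bounded while still letting different reference styles draw on different specialists.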

2. The Power of Large-Scale, Multimodal Data

The remarkable leap in AI voice quality is fueled by two key ingredients: massive datasets and a richer understanding of context.

Data and Context Innovations

Training on a Global Scale: Modern TTS models are trained on vast, multi-domain corpora of human speech, often containing tens of thousands of hours of audio from countless speakers.¹⁷ This sheer volume of data allows the neural networks to learn the incredibly complex and subtle patterns of natural language.

Multimodal Learning: The most advanced systems are moving beyond just text and audio. Multimodal models incorporate other data streams, such as video. By analyzing a character's facial expressions and lip movements, a model can generate a voice track that is not only emotionally appropriate but also perfectly synchronized, which is critical for high-quality dubbing and avatar animation.²⁸

Contextual Conversational Models: To solve the problem of stilted, unnatural back-and-forth dialogue, researchers have developed Conversational Speech Models (CSM). Instead of generating each utterance in isolation, these models leverage the history of the conversation (including the tone, rhythm, and emotional context of previous lines) to produce a response that is more coherent and natural. They often use a single, end-to-end transformer architecture to make this process more efficient and expressive.⁵
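The bookkeeping side of a conversation-aware model, keeping recent turns and choosing how many to condition on, can be sketched as a rolling buffer with a budget. Everything here is a hypothetical illustration: the `Turn` fields, the "discrete audio tokens," and the cost rule are assumptions, not the published CSM design.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Turn:
    speaker: str
    text: str
    audio_tokens: list[int] = field(default_factory=list)  # hypothetical discrete audio codes

    def cost(self) -> int:
        # Assumed budget unit: one per word plus one per audio token.
        return len(self.text.split()) + len(self.audio_tokens)


class ConversationContext:
    """Holds recent turns so the next utterance can be conditioned on them."""

    def __init__(self, budget: int, max_turns: int = 32) -> None:
        self.budget = budget
        self.turns: deque[Turn] = deque(maxlen=max_turns)  # oldest turns drop off

    def add(self, turn: Turn) -> None:
        self.turns.append(turn)

    def window(self) -> list[Turn]:
        """The most recent turns that fit within the budget, oldest first."""
        selected: list[Turn] = []
        used = 0
        for turn in reversed(self.turns):
            if used + turn.cost() > self.budget:
                break
            selected.append(turn)
            used += turn.cost()
        return selected[::-1]


ctx = ConversationContext(budget=7)
ctx.add(Turn("user", "hello there", [1, 2]))   # cost 4
ctx.add(Turn("agent", "hi", [3]))              # cost 2
ctx.add(Turn("user", "how are you", [4, 5]))   # cost 5
context = ctx.window()  # the last two turns fit (2 + 5 = 7); adding the first would exceed 7
```

Walking backwards from the newest turn favours the most recent exchange, which usually carries the tone the next reply should match.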

3. Embracing "Authentic Imperfection"

Perhaps the most fascinating development is the intentional re-introduction of human-like "flaws." Developers have realized that the pursuit of sterile, technical perfection leads directly into the uncanny valley.²⁴ To create believable voices, they must create voices that are imperfect in an authentic way. This means designing systems that can incorporate natural-sounding pauses, hesitations, stammers, and breaths into the synthesized speech.²⁴ This is not a matter of adding random noise; it requires a deep, data-driven understanding of the linguistic and psychological function of these disfluencies, allowing the AI to sound not robotic, but genuinely human.

4. Architectural Innovations: Transformers, Diffusion, and Vocoders

These conceptual breakthroughs are made possible by powerful new AI architectures.

Technical Architecture

Transformers: The same architecture that powers large language models like GPT is now central to speech synthesis. Transformers are exceptionally good at understanding long-range context, allowing them to model the relationships between words and phrases across long passages of text and audio, leading to more coherent and natural prosody.⁵
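The mechanism behind that long-range reach is attention. Below is a minimal, dependency-free sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V. It omits the learned projections, multiple heads, and masking a real TTS Transformer would use; the inputs are plain lists of vectors.

```python
import math

Vector = list[float]


def softmax(scores: Vector) -> Vector:
    m = max(scores)                           # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: list[Vector], keys: list[Vector], values: list[Vector]) -> list[Vector]:
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V.

    Every query position scores every key position directly, so a word at the
    end of a passage can draw on one at the start as easily as on its neighbour.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs
```

For example, a query that matches two keys equally returns the average of their values, while a query aligned with one key returns something close to that key's value.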

Diffusion Models: This class of generative models has proven to be highly effective at creating rich, detailed outputs. In speech, diffusion models are used to generate high-quality acoustic features (like spectrograms) that closely approach the quality of human speech.²⁸
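The forward (noising) half of a diffusion model fits in a few lines. The sketch assumes the linear noise schedule from the original DDPM paper (1,000 steps, beta rising from 1e-4 to 0.02) and treats a spectrogram frame as a flat list of floats. A trained network then learns to reverse this process step by step; that reverse pass is what actually generates speech features and is not shown here.

```python
import math
import random


def linear_betas(T: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> list[float]:
    """Per-step noise variances, rising linearly (the schedule used in the DDPM paper)."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def alpha_bars(betas: list[float]) -> list[float]:
    """Running product of (1 - beta_t): how much of the clean signal's variance remains at step t."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def add_noise(x0: list[float], t: int, abar: list[float], rng: random.Random) -> list[float]:
    """Sample q(x_t | x_0) in closed form: sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    a = abar[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


abar = alpha_bars(linear_betas())
frame = [0.5, -0.2, 0.8, 0.1]                 # toy stand-in for one spectrogram frame
barely_noisy = add_noise(frame, 10, abar, random.Random(0))
pure_noise = add_noise(frame, 999, abar, random.Random(0))
```

The closed-form jump to any step `t` is what makes training practical: the model can be shown a frame at a random noise level without simulating every step in between.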

Advanced Vocoders: The final, critical step in TTS is converting the model's abstract acoustic representation into an actual audio waveform. This is the job of a vocoder. Modern neural vocoders (like WaveRNN or HiFi-GAN) have replaced older, more robotic-sounding methods. They are essential for producing the final, high-fidelity audio that we hear, keeping it clear, rich, and largely free of digital artifacts.¹⁹
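The "abstract acoustic representation" that a neural vocoder such as HiFi-GAN receives is typically a mel spectrogram: energy in frequency bands spaced on the perceptual mel scale, computed once per hop of audio. The sketch uses the common HTK mel formula; the 80 bands, 8 kHz ceiling, and 256-sample hop are typical but assumed settings, not values from any particular product.

```python
import math


def hz_to_mel(f_hz: float) -> float:
    """HTK-style mel scale: close to linear below about 1 kHz, logarithmic above."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(mel: float) -> float:
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_centres(n_mels: int = 80, f_min: float = 0.0, f_max: float = 8000.0) -> list[float]:
    """Centre frequencies (Hz) of n_mels filters spaced evenly on the mel scale."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_mels + 1)
    return [mel_to_hz(lo + step * (i + 1)) for i in range(n_mels)]


def samples_per_frames(n_frames: int, hop_length: int = 256) -> int:
    """Waveform samples a vocoder must produce: one hop of audio per spectrogram frame."""
    return n_frames * hop_length


centres = mel_band_centres()
low_gap = centres[1] - centres[0]      # narrow spacing at low frequencies
high_gap = centres[-1] - centres[-2]   # much wider spacing near 8 kHz
```

With a 256-sample hop at 22,050 Hz, one second of audio corresponds to roughly 86 frames, so the vocoder has to turn each compact frame back into 256 waveform samples without introducing audible artifacts.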

A common thread running through all these advancements is a strategy of disentanglement. Early TTS systems treated speech as a monolithic block of sound. The new paradigm is to break speech down into its constituent parts—content (what is said), speaker identity (timbre), prosody (rhythm and intonation), and emotion—and model them as separate, controllable elements.²⁸ This modular approach is what gives creators fine-grained control, allowing them to mix and match elements to craft the perfect vocal performance for any context, providing a powerful toolkit for finally escaping the uncanny valley.

Navigating the Landscape: A Creator's Guide to Modern Voice AI Tools

The explosion of innovation in speech synthesis has led to a crowded and often confusing marketplace. A host of AI voice generators now compete for the attention of content creators, each offering a unique blend of features, quality, and pricing.³⁶ For podcasters, video producers, and developers, choosing the right tool is critical. The decision often comes down to navigating a complex trade-off between raw vocal quality and practical workflow efficiency. To understand where a solution like VocalCopycat fits, it is essential to first understand the landscape defined by its leading competitors.

Introducing the Key Players

Among the dozens of available tools, two names have consistently emerged as market leaders, setting the standard for what creators expect from high-end voice AI.

ElevenLabs

Widely regarded as a benchmark for voice quality, ElevenLabs has built its reputation on producing exceptionally realistic and emotionally expressive synthetic voices.³⁸ Its deep learning models are renowned for their ability to capture human-like intonation and inflection, making the platform a top choice for applications where naturalness is the highest priority, such as audiobook narration and character performance.⁴³

However, this pursuit of peak realism is not without its drawbacks. Users frequently report the presence of subtle but frustrating artifacts—occasional mispronunciations, unnatural pauses, or glitches in inflection that break the illusion of reality.²² Correcting these flaws often requires time-consuming manual editing and re-generation, disrupting the creative process. Furthermore, its premium pricing can be a significant consideration for independent creators and smaller businesses.⁴⁵

Murf AI

Murf AI has carved out a strong position as a comprehensive, user-friendly platform for professional voiceovers.⁴⁴ Its key strength lies in its all-in-one "studio" environment, which combines voice generation with tools for editing, adding background music, and fine-tuning audio. This makes it an excellent choice for teams and for projects like corporate training videos or advertisements where workflow efficiency and collaboration are paramount.⁴³

Murf offers a large library of voices across many languages and accents. However, while its voice quality is high, it is often perceived by users as being slightly less natural and having a more discernible "synthetic" edge compared to the ultra-realism of ElevenLabs.⁴³

The Creator's Dilemma

This competitive dynamic presents a clear dilemma for the modern content creator. They are often forced to choose between two imperfect options, navigating a trade-off between ultimate quality and practical usability.

On one hand, a tool like ElevenLabs offers the promise of unparalleled realism, but this can come at the cost of a frustrating and inefficient workflow. Creators may spend hours hunting down and fixing the small artifacts that prevent the audio from being perfect, turning them from storytellers into audio engineers.

On the other hand, a tool like Murf AI offers a streamlined, efficient workflow, but may require a slight compromise on that final, crucial mile of vocal naturalness. The voice might be good, but not quite convincing enough for the most demanding applications.

This creates a significant gap in the market. Creators are looking for a solution that resolves this dilemma—a platform that delivers the artifact-free, top-tier realism of the best models combined with a workflow that is efficient, accessible, and designed with the creator's time in mind. It is this specific need that has paved the way for a new generation of tools engineered to provide the best of both worlds.

The VocalCopycat Solution: Superior Synthesis Without the Side Effects

In a market defined by the trade-off between quality and workflow, VocalCopycat emerges as a platform engineered from the ground up to resolve this central dilemma for creators. It is designed for users who demand the highest standard of vocal realism but cannot afford the time-consuming post-production and artifact correction that often come with it.²² By directly addressing the root causes of the uncanny valley, VocalCopycat provides a solution that respects both the art of voice and the value of a creator's time.

1. Solving the Artifact Problem: The Core of the Uncanny Valley

The "creepy" feeling of the uncanny valley is triggered by specific, identifiable flaws. The unnatural pauses, robotic inflections, and jarring mispronunciations that plague many AI voices are the very artifacts that VocalCopycat's core technology is built to eliminate.²² The platform's proprietary neural voice technology uses models designed to capture natural speech patterns more faithfully and to keep quality consistent across an entire recording. That consistency is particularly crucial for long-form content like podcasts or audiobooks, where other tools can begin to exhibit quality degradation over time.²²

The result is a tangible improvement in workflow efficiency. Creators using VocalCopycat have reported a remarkable 70% reduction in post-editing time compared to other leading voice AI platforms.²² This is not just a marginal improvement; it represents a fundamental shift in the creative process. By delivering clean, artifact-free audio from the start, the platform frees creators from the tedious cycle of re-generation and manual correction, allowing them to focus on their primary goal: creating compelling content.

2. Democratizing High-Fidelity Voice Cloning

Another significant barrier for many creators has been the high technical and resource requirements for professional-grade voice cloning. Competitors often require 30 minutes or more of clean, studio-quality audio to produce a convincing voice replica, a demand that is impractical for many.²² VocalCopycat's more efficient models are designed to overcome this hurdle. The system can generate high-quality, convincing voice clones from much shorter audio samples, dramatically lowering the barrier to entry.²² This efficiency makes truly personalized, professional-grade voice technology accessible to a far wider range of creators, from independent podcasters to small marketing teams.⁴⁶

3. A Creator-First Platform: Pricing and Usability

The final piece of the puzzle is accessibility. The most advanced AI voice technology has often been locked behind enterprise-level pricing, putting it out of reach for the very creators who could benefit from it most.²² VocalCopycat's business model is explicitly designed to address this "prohibitive pricing." By offering more competitive rates while delivering what it positions as superior, artifact-free results, the platform aims to democratize access to premium voice synthesis.²² This creator-first approach ensures that individuals and small businesses are no longer forced to choose between affordability and professional quality.

Platform Comparison

The following table provides a clear, at-a-glance comparison, distilling the key differences between these platforms on the dimensions that matter most to content creators.

| Feature/Attribute | VocalCopycat | ElevenLabs | Murf AI |
|---|---|---|---|
| Voice Realism & Artifacts | Superior synthesis with significantly fewer artifacts; designed for long-form consistency.²² | Exceptionally realistic but can be prone to artifacts requiring manual correction and re-generation.⁴⁵ | High-quality but can have a subtle synthetic edge; often perceived as less natural than market leaders.⁴⁵ |
| Voice Cloning Efficiency | High-quality clones from short audio samples, lowering the barrier to entry for creators.²² | Works best with longer audio samples, measured in minutes, which places a higher demand on users.⁴⁷ | Voice cloning is available, typically on higher-tier plans, with a focus on usability within its studio.⁴³ |
| Core Focus | Creator Workflow & Quality: Delivering artifact-free, premium voices to maximize efficiency.²² | Raw Voice Realism: Pushing the boundaries of naturalness and emotional expression.⁴³ | All-in-One Voiceover Studio: Providing a comprehensive, user-friendly platform with collaboration tools.⁴³ |
| Pricing Model | Creator-first, accessible pricing designed to democratize premium technology.²² | Premium pricing tiers that can be a significant investment for advanced features and high usage.⁴⁵ | Feature-based subscription tiers with a focus on providing value for business and team use cases.⁴⁴ |

Conclusion: The Future is Heard, Not Just Seen

The journey into the uncanny valley of voice reveals a fascinating intersection of psychology, technology, and art. What began with Masahiro Mori's observations of near-human robots has become a central challenge in the age of artificial intelligence. The "creepy" feeling elicited by an almost-human voice is not a random glitch but a predictable, deeply human response to identifiable flaws—the unnatural rhythms, the emotional disconnects, and the unsettling perfection that violate our subconscious expectations of speech. For decades, the evolution of text-to-speech technology was a steady climb toward realism, a journey that inadvertently led us right to the edge of this unsettling chasm.

Today, however, the industry is at a turning point. The standard is no longer "good enough" or merely intelligible speech. Fueled by breakthroughs in neural networks, large-scale data, and a more nuanced understanding of what makes a voice feel authentic, developers are finally learning how to climb out of the valley. The new benchmark is emotionally resonant, context-aware, and believable speech that is free from the immersion-breaking artifacts that have frustrated creators and audiences alike.

Platforms at the forefront of this movement are redefining what is possible. VocalCopycat, engineered specifically to solve the core problems that create the uncanny valley, represents this new standard. By focusing on eliminating the subtle artifacts that disrupt a listener's trust and streamlining the creative workflow, it offers a solution that combines superior quality with practical efficiency. It is built on the principle that creators should be able to leverage the power of AI without becoming audio engineers, and that audiences deserve to hear stories and content delivered with the flawless, natural cadence of a truly human voice.

The future of digital interaction will be heard as much as it is seen. As AI voices become more integrated into our daily lives—from our assistants and entertainment to our educational tools and personal communications—their ability to connect with us on a human level will be paramount. Don't let your stories get lost in the valley. Experience the difference of artifact-free, truly natural AI voice. Try VocalCopycat today and let your content be heard, perfectly.

References

1. The Uncanny Valley of Voice: Why Some AI Receptionists Creep Us Out. MyAI Front Desk. Accessed June 24, 2025. https://www.myaifrontdesk.com/blogs/the-uncanny-valley-of-voice-why-some-ai-receptionists-creep-us-out
2. Uncanny Valley: Examples, Effects, & Theory. Simply Psychology. Accessed June 24, 2025. https://www.simplypsychology.org/uncanny-valley.html
3. Uncanny Valley. REMO Since 1988. Accessed June 24, 2025. https://remosince1988.com/blogs/stories/uncanny-valley
4. The Uncanny Valley: AI's Struggle with Naturalness in Voices. SWAGGER Magazine. Accessed June 24, 2025. https://www.swaggermagazine.com/technology/the-uncanny-valley-ais-struggle-with-naturalness-in-voices/
5. Crossing the uncanny valley of conversational voice. Sesame. Accessed June 24, 2025. https://www.sesame.com/research/crossing_the_uncanny_valley_of_voice
6. "Not Human Enough: The Origins of the Uncanny Valley" by Franklin. University of Richmond Scholarship Repository. Accessed June 24, 2025. https://scholarship.richmond.edu/osmosis/vol2025/iss1/6/
7. Uncanny valley. Wikipedia. Accessed June 24, 2025. https://en.wikipedia.org/wiki/Uncanny_valley
8. Uncanny Valley: Why Realistic CGI and Robots Are Creepy. Verywell Mind. Accessed June 24, 2025. https://www.verywellmind.com/what-is-the-uncanny-valley-4846247
9. The uncanny valley: does it happen with voices? Trevor Cox. Accessed June 24, 2025. http://trevorcox.me/the-uncanny-valley-does-it-happen-with-voices
10. Have We Bridged the Uncanny Valley? Science Museum of Virginia Blog. Accessed June 24, 2025. https://smv.org/learn/blog/have-we-bridged-uncanny-valley/
11. Investigating the 'uncanny valley' effect in robotic, human and CGI movement. Student Journals - Sheffield Hallam University. Accessed June 24, 2025. https://studentjournals.shu.ac.uk/index.php/enquiry/article/download/111/111/332
12. Discovering the uncanny valley for the sound of a voice. Tilburg University. Accessed June 24, 2025. http://arno.uvt.nl/show.cgi?fid=149554
13. A mismatch in the human realism of face and voice produces an uncanny valley. PMC. Accessed June 24, 2025. https://pmc.ncbi.nlm.nih.gov/articles/PMC3485769/
14. A History of Text-to-Speech: From Mechanical Voices to AI Assistants. Vapi. Accessed June 24, 2025. https://vapi.ai/blog/history-of-text-to-speech
15. Speech synthesis. Wikipedia. Accessed June 24, 2025. https://en.wikipedia.org/wiki/Speech_synthesis
16. Text-to-Speech Technology (Speech Synthesis). The ANSI Blog. Accessed June 24, 2025. https://blog.ansi.org/text-to-speech-technology-speech/
17. From Text to Speech: The Evolution of Synthetic Voices. IgniteTech. Accessed June 24, 2025. https://ignitetech.ai/about/blogs/text-speech-evolution-synthetic-voices
18. Voice synthesis - A short history of a technical prowess. Theodo Data & AI. Accessed June 24, 2025. https://data-ai.theodo.com/en/technical-blog/how-i-built-ai-powered-phone-answering-machine-voice-synthesis-history-part-2
19. Text-to-Speech Technology: How It Works and Why It Matters in 2025. Voiceflow. Accessed June 24, 2025. https://www.voiceflow.com/blog/text-to-speech
20. The Journey of Text-to-Speech: From Robotic Voices to AI-Powered Realism. CAMB.AI. Accessed June 24, 2025. https://www.camb.ai/blog-post/the-journey-of-text-to-speech-from-robotic-voices-to-ai-powered-realism
21. What is Text to Speech? IBM. Accessed June 24, 2025. https://www.ibm.com/think/topics/text-to-speech
22. VOCALCopyCat: Voice generation and cloning cheaper and better than 11Labs. Product Hunt. Accessed June 24, 2025. https://www.producthunt.com/products/vocalcopycat
23. Why does AI Voice generation sound so "uncanny valley" and "inorganic"? Reddit. Accessed June 24, 2025. https://www.reddit.com/r/aiwars/comments/1iiy0yj/why_does_ai_voice_generation_sound_so_uncanny/
24. The Uncanny Valley of AI Voice: Why Imperfection Matters. Wayline. Accessed June 24, 2025. https://www.wayline.io/blog/ai-voice-uncanny-valley-imperfection
25. Speech Synthesis and Uncanny Valley. ResearchGate. Accessed June 24, 2025. https://www.researchgate.net/publication/268207867_Speech_Synthesis_and_Uncanny_Valley
26. Voices from the Uncanny Valley. Digital Culture & Society. Accessed June 24, 2025. http://digicults.org/files/2019/11/dcs-2018-0105.pdf
27. Alexa, Who Am I? The Uncanny Valley of AI-Generated Voices. Techquity India. Accessed June 24, 2025. https://www.techquityindia.com/alexa-who-am-i-the-uncanny-valley-of-ai-generated-voices/
28. Prosody-Enhanced Acoustic Pre-training and Acoustic-Disentangled. arXiv. Accessed June 24, 2025. https://arxiv.org/pdf/2503.12042
29. arXiv:2409.16681v2 [eess.AS] 8 Apr 2025. arXiv. Accessed June 24, 2025. https://arxiv.org/pdf/2409.16681
30. Going Retro: Astonishingly Simple Yet Effective Rule-based Prosody Modelling for Speech Synthesis Simulating Emotion Dimensions. arXiv. Accessed June 24, 2025. https://arxiv.org/abs/2307.02132
31. Style Mixture of Experts for Expressive Text-To-Speech Synthesis. arXiv. Accessed June 24, 2025. https://arxiv.org/abs/2406.03637
32. AI Evolution: The Future of Text-to-Speech Synthesis. Veritone Voice. Accessed June 24, 2025. https://www.veritonevoice.com/blog/future-of-text-to-speech-synthesis/
33. Sparse Alignment Enhanced Latent Diffusion Transformer for Zero-Shot Speech Synthesis. arXiv. Accessed June 24, 2025. https://arxiv.org/html/2502.18924v1
34. Towards Controllable Speech Synthesis in the Era of Large Language Models: A Survey. arXiv. Accessed June 24, 2025. https://arxiv.org/html/2412.06602v1
35. Incredible Demo of AI voice to voice model: Crossing the uncanny valley of conversational voice. Reddit. Accessed June 24, 2025. https://www.reddit.com/r/Futurology/comments/1j175qw/incredible_demo_of_ai_voice_to_voice_model/
36. Best AI Video and Voice Generation Tools in 2025. Bureau Works. Accessed June 24, 2025. https://www.bureauworks.com/blog/best-ai-video-and-voice-generation-tools-in-2025
37. The 7 Best AI Voice Generators to Explore in 2025. Edcafe AI. Accessed June 24, 2025. https://www.edcafe.ai/blog/ai-voice-generators
38. The Best AI Voice Generator Tools (2025) + 15 Voice Samples. Saastake. Accessed June 24, 2025. https://saastake.com/the-best-ai-voice-generator/
39. Best AI Voice Generators of 2025: A Review and Comparison. Vodien. Accessed June 24, 2025. https://www.vodien.com/learn/best-ai-voice-generator/
40. ElevenLabs and Murf.ai are making millions with open source groundwork. Reddit. Accessed June 24, 2025. https://www.reddit.com/r/SaaS/comments/1hrv2o5/elevenlabs_and_murfai_are_making_millions_with/
41. I tested 3 AI voice tools — this one was the most realistic (with free version). Reddit. Accessed June 24, 2025. https://www.reddit.com/r/audiobooks/comments/1kcbqy5/i_tested_3_ai_voice_tools_this_one_was_the_most/
42. 10 Best AI Voice Generators of 2025 (Free & Paid Tools). Demand Sage. Accessed June 24, 2025. https://www.demandsage.com/ai-voice-generators/
43. Murf AI vs ElevenLabs: Ultimate AI Voice Comparison 2025. FahimAI. Accessed June 24, 2025. https://www.fahimai.com/murf-ai-vs-elevenlabs
44. Murf Ai vs Elevenlabs: Tried Both & Here's the Winner [2025]. Murf.ai. Accessed June 24, 2025. https://murf.ai/compare/murf-ai-vs-elevenlabs
45. ElevenLabs vs. Murf AI: A Comprehensive Comparison. Foundation Marketing. Accessed June 24, 2025. https://foundationinc.co/lab/elevenlabs-vs-murf-ai/
46. Vocalcopycat is now on Microlaunch. Microlaunch. Accessed June 24, 2025. https://microlaunch.net/p/vocalcopycat
47. ElevenLabs vs Murf. Cartesia. Accessed June 24, 2025. https://cartesia.ai/vs/elevenlabs-vs-murf